4. Your validation strategy

Verification, usability validation, analytical validation, and clinical validation

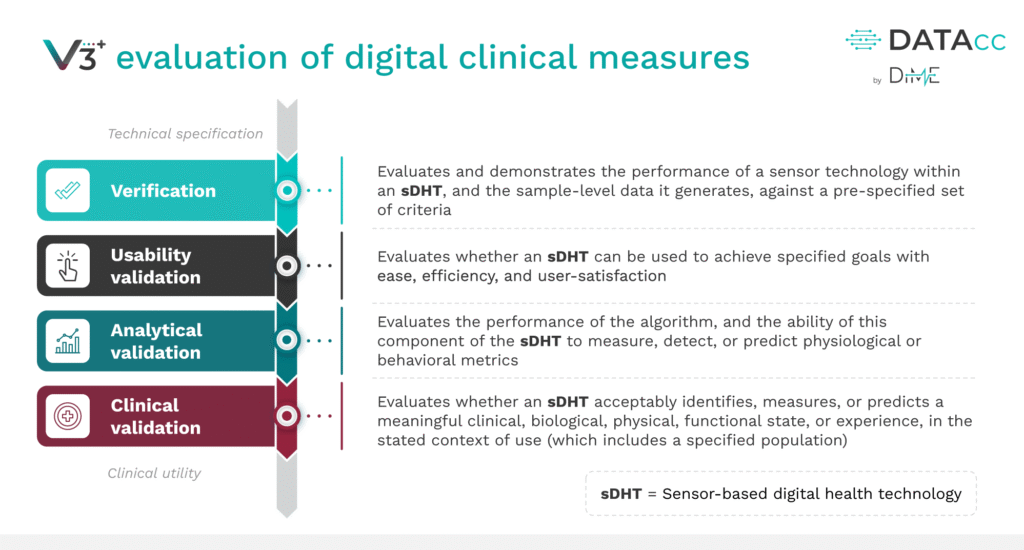

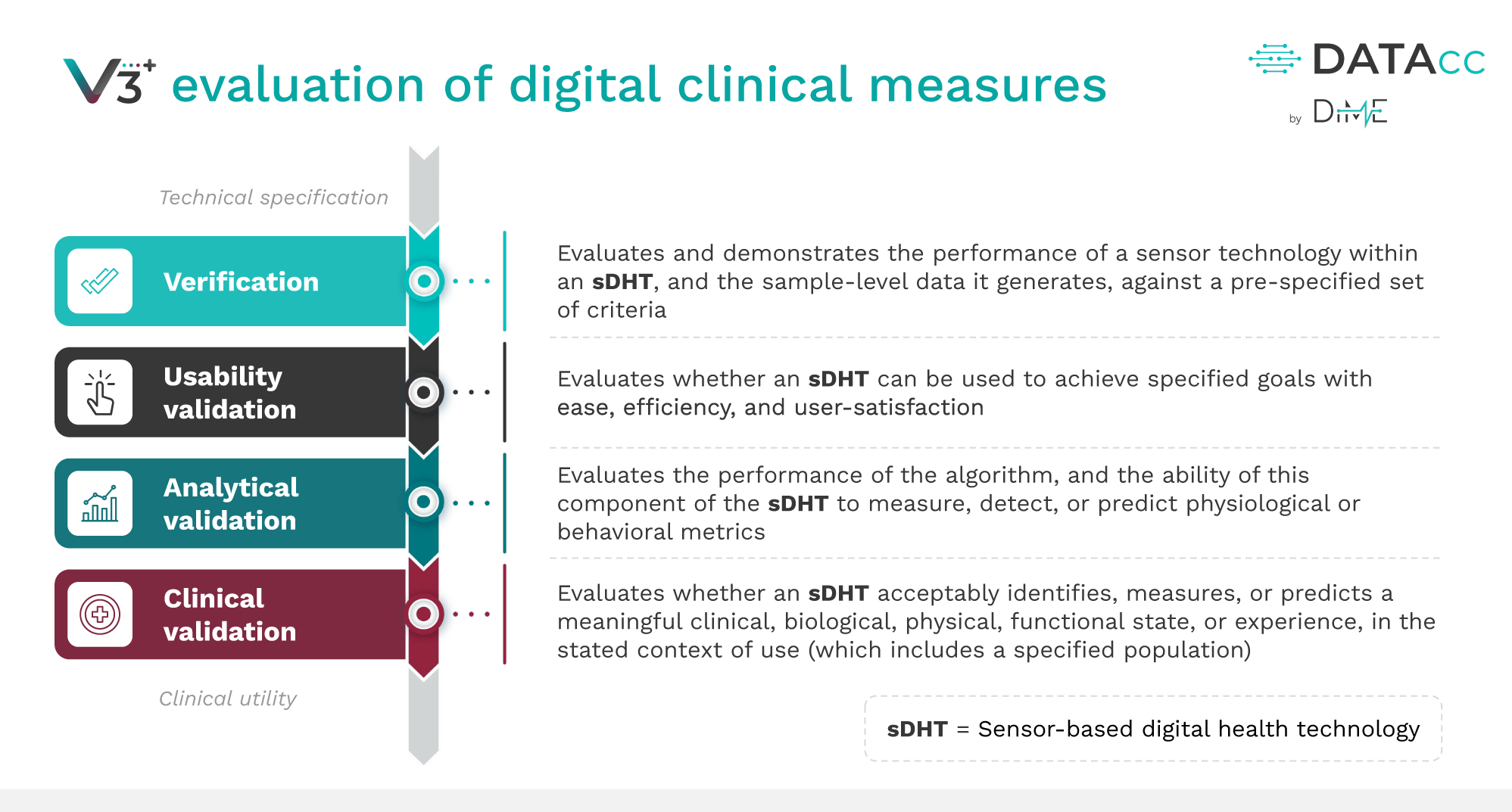

We’ve been referencing elements of the V3+ framework (verification, usability validation, analytical validation, and clinical validation) throughout the roadmap without fully explaining what each entails. Let’s take a moment to build a thorough understanding of this industry-standard framework.

Developers and adopters should feel confident that they can systematically demonstrate that digital clinical measures, and the tools collecting them, are fit-for-purpose. That means documenting that the sDHT meets technical specifications, produces accurate and meaningful data, and can be used reliably by the target population.

FDA SPOTLIGHT

“Fit-for-Purpose: A conclusion that the level of validation associated with a medical product development tool is sufficient to support its context of use.”

– Appendix 2 (Glossary), p. 37, Patient-Focused Drug Development: Collecting Comprehensive and Representative Input, Final, 2020 (FDA)

OVERVIEW

The V3+ framework

Build your evidence

Implement the V3+ framework to build a body of evidence that satisfies regulatory requirements and instills confidence among all stakeholders:

Developers

Clinical trial sponsors

Regulators

Patients

Payors

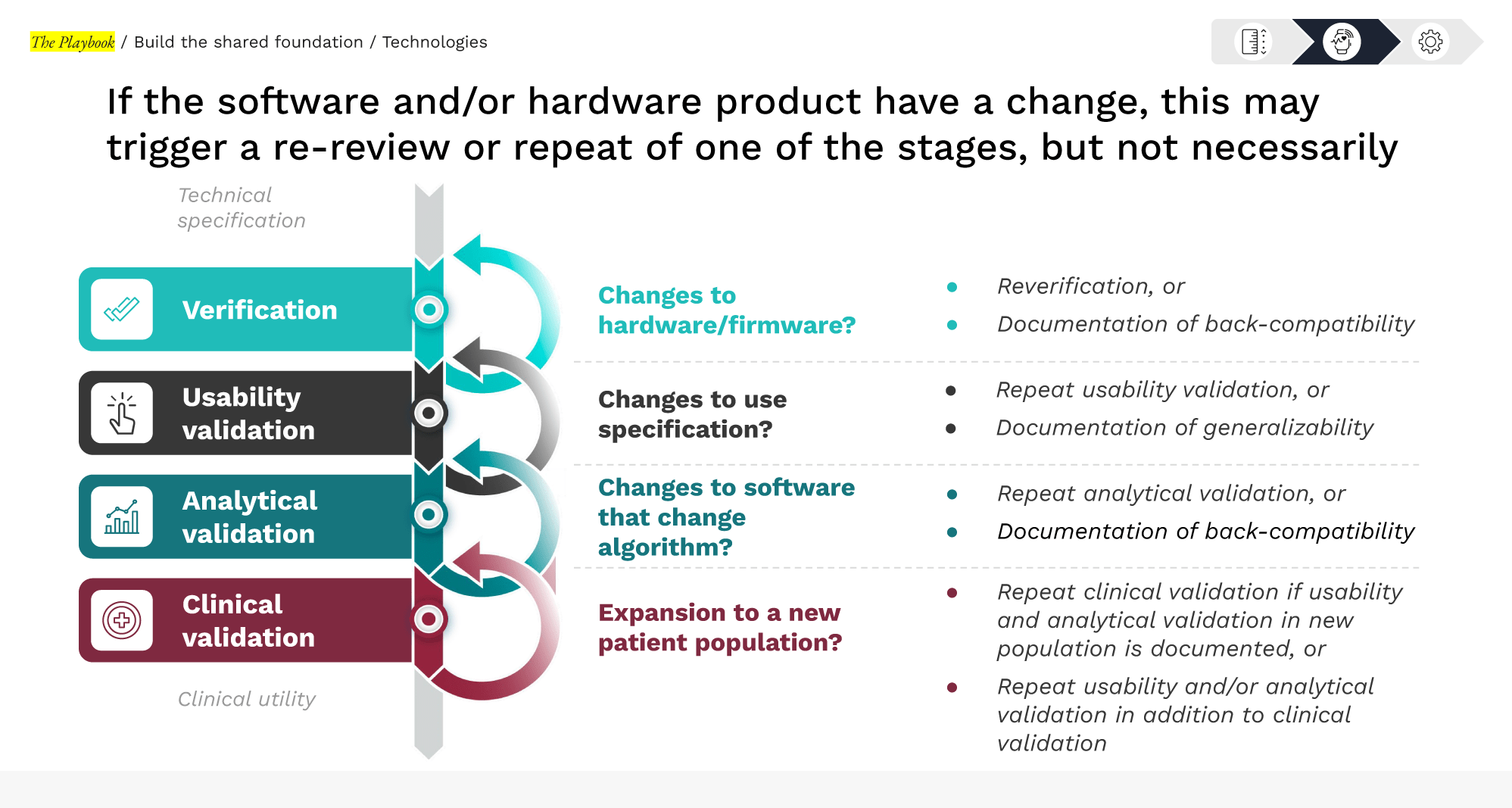

Each domain of the framework addresses distinct questions about the sDHT and the digital endpoints it will capture:

[Verification]

Does the sensor hardware accurately and reliably capture the raw data it’s supposed to?

Verification evaluates the performance of the sDHT’s sensor(s) against pre-specified technical criteria.

[Usability validation]

Can the intended users (patients, caregivers, study staff) use the technology as intended, easily and safely, to collect high-quality data?

Usability validation ensures the sDHT can be used with efficiency, ease, and user satisfaction at scale, without introducing errors or burden that could undermine data quality.

[Analytical validation]

Does the algorithm transform that signal into a trustworthy measure?

Analytical validation evaluates the performance of an algorithm to convert sensor outputs into physiological metrics using a defined data capture protocol in a specific subject population. It is typically conducted with human subject data and involves comparing the sDHT’s digital measurements against an appropriate reference standard.

[Clinical validation]

Does the digital measure meaningfully reflect or predict a clinical state or outcome in the real-world context?

Clinical validation assesses whether the sDHT-derived outcome is associated with a meaningful clinical or functional state in the target patient population and use context.
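
As a rough illustration of the analytical validation comparison described above, the sketch below summarizes agreement between paired sDHT and reference measurements using mean bias, mean absolute error, and Bland-Altman 95% limits of agreement. This is one common approach, not a method prescribed by the V3+ framework. The function names, the heart-rate values, and the acceptance thresholds are all hypothetical; real acceptance criteria should be pre-specified for the intended context of use.

```python
from statistics import mean, stdev


def agreement_summary(device, reference):
    """Summarize agreement between paired device and reference measurements."""
    if len(device) != len(reference):
        raise ValueError("device and reference must be paired (same length)")
    if len(device) < 2:
        raise ValueError("need at least two paired observations")

    # Per-pair difference: positive means the device reads higher than the reference.
    diffs = [d - r for d, r in zip(device, reference)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences

    return {
        "n": len(diffs),
        "bias": bias,
        "mae": mean([abs(x) for x in diffs]),
        # Bland-Altman 95% limits of agreement: bias +/- 1.96 * SD of differences.
        "loa_lower": bias - 1.96 * sd,
        "loa_upper": bias + 1.96 * sd,
    }


def meets_criteria(summary, max_abs_bias, max_abs_loa):
    """Check a summary against hypothetical, pre-specified acceptance criteria."""
    widest_loa = max(abs(summary["loa_lower"]), abs(summary["loa_upper"]))
    return abs(summary["bias"]) <= max_abs_bias and widest_loa <= max_abs_loa


# Made-up heart-rate values (beats per minute), for illustration only.
device_bpm = [72, 75, 80, 68, 90, 85]
reference_bpm = [70, 76, 79, 69, 88, 86]

summary = agreement_summary(device_bpm, reference_bpm)
print(summary)
print("Within criteria:", meets_criteria(summary, max_abs_bias=2.0, max_abs_loa=5.0))
```

In a real study, the choice of agreement statistics depends on the measure and the reference standard, and the analysis would typically also account for repeated measurements per participant, which this sketch ignores.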

IN PRACTICE

This stage requires investment and collaboration

Developers and adopters must collaborate, often with regulators and patient communities, to share the validation burden and align on evidentiary standards. The payoff is substantial: a well-validated digital measure can accelerate clinical trials, improve patient outcomes, and provide a competitive edge in a field that increasingly values quality and trustworthiness of sDHTs.

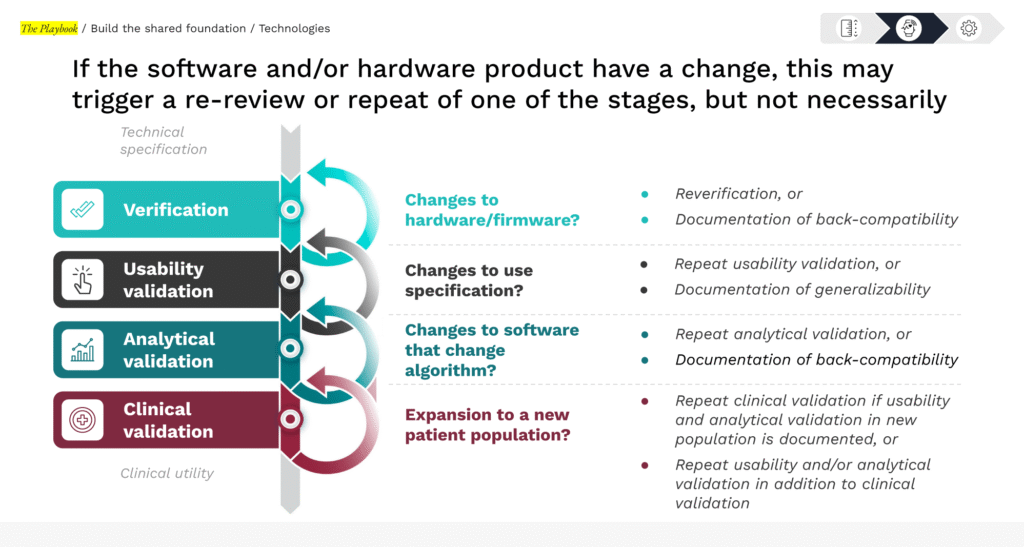

Validation is a modular and iterative process

Once verification, usability, and analytical validation are established, expanding to a new context of use often requires only a targeted or partial clinical validation to confirm performance in the new population or setting—without repeating all prior work.

Source: Verification, analytical validation, and clinical validation (V3); Extending V3 with Usability Validation; Playbook team analysis

Check your V3+ readiness

PRO TIP FOR DEVELOPERS

See example use cases in The Playbook: Digital Clinical Measures (DiMe)

Not sure if your validation plan will meet FDA expectations? If major gaps were flagged in your maturity assessment, consider informal feedback mechanisms to confirm you’re on the right track (see Section 3: Engage Regulators).


Ready to dive in?

The following sections detail each validation domain, explaining the requirements, methods, and strategic value, along with practical considerations for collaboration and burden-sharing between technology developers and adopters.

[Verification]

Confirm hardware and firmware reliability to establish a solid foundation for all downstream evidence.

[Usability validation]

Evaluate real-world performance to ensure intended users can operate the sDHT safely and effectively, protecting participants and data quality at scale.

[Analytical validation]

Assess the algorithm’s ability to transform sensor data into trustworthy digital measures by comparing performance against appropriate reference standards.

[Clinical validation]

Evaluate whether digital measures meaningfully reflect or predict clinically relevant states or outcomes within the target patient population.