4.3 Analytical validation

Analytical validation evaluates whether the algorithm that processes the sensor’s sample-level data is producing a correct, accurate, and reliable measure of the physiological or behavioral phenomenon of interest.

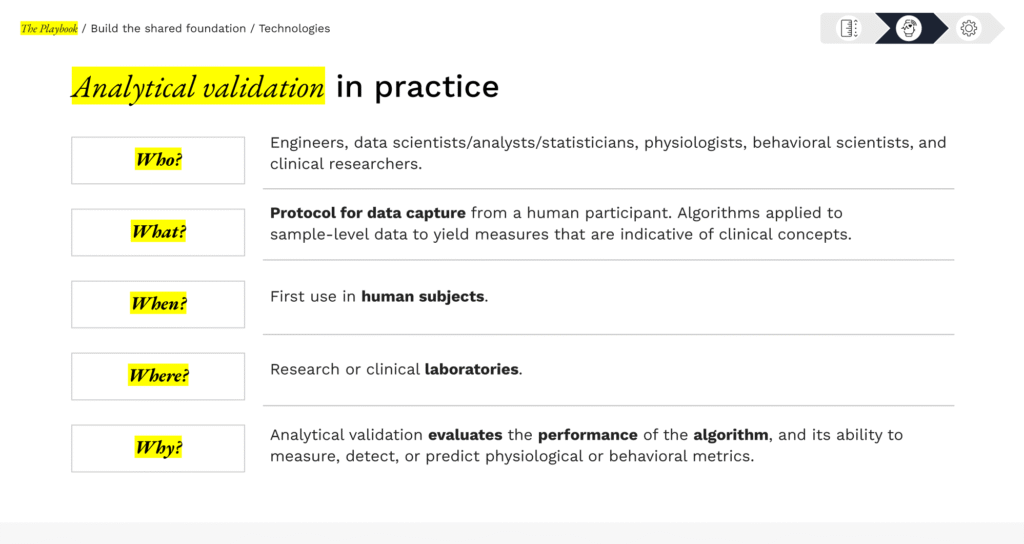

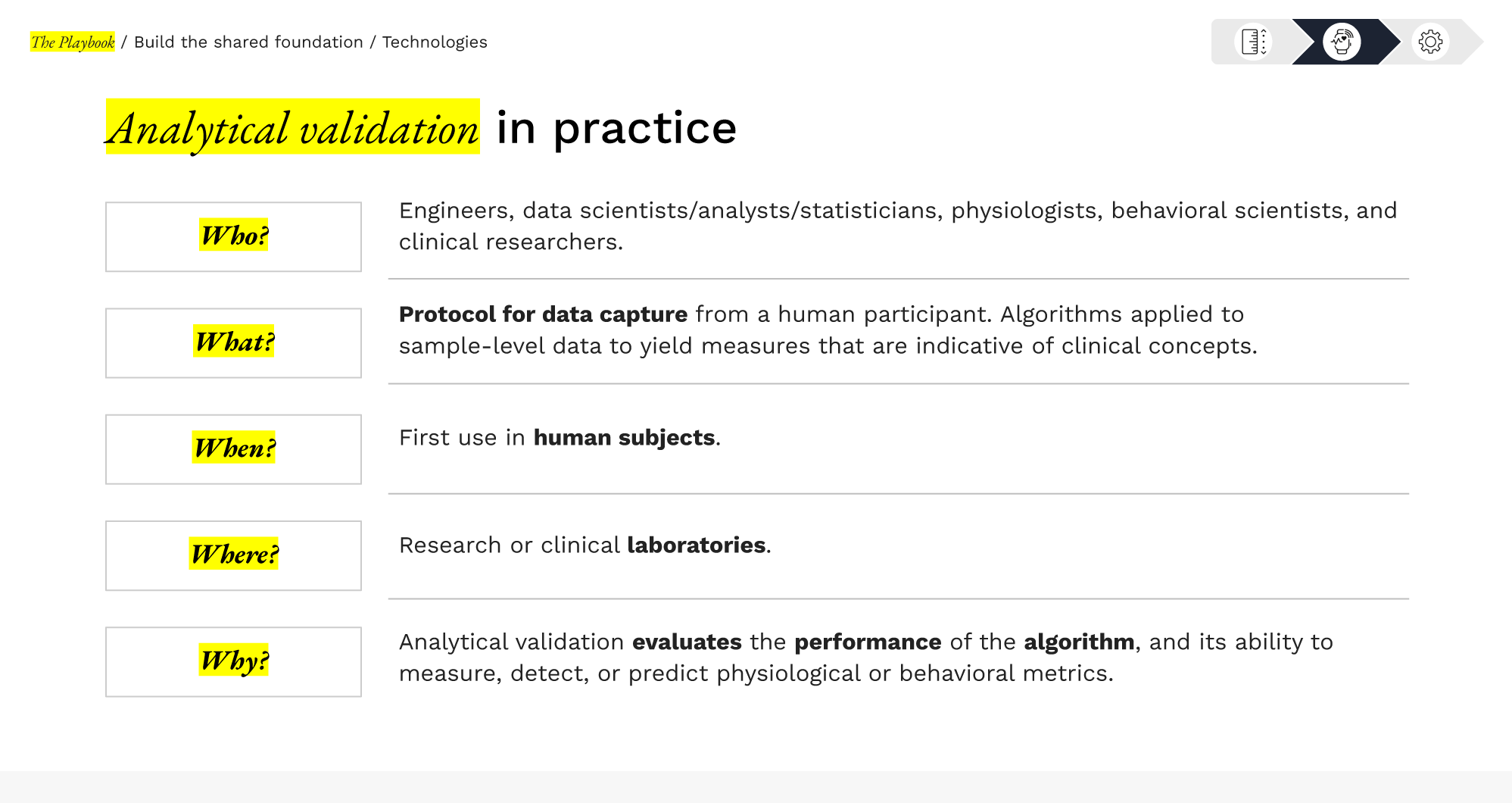

This step connects the engineering world to the data science realm – it’s about confirming that the sDHT’s signal processing or predictive model works as intended. Analytical validation is typically conducted with human subject data (since algorithms interpret human-generated signals) and involves comparing the sDHT’s digital measurements against an appropriate reference standard.

This is crucial for quantifying measurement error and confirming that it is acceptably small, so that the digital outcome can be trusted quantitatively (e.g., a step count algorithm actually counts steps accurately, or a gait speed algorithm truly measures gait velocity). It sets the stage for clinical interpretation by establishing the algorithm’s scientific soundness.
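As a minimal illustration of comparing an sDHT's output against a reference standard, the Python sketch below summarizes agreement for paired measurements, for example device step counts versus steps counted manually from video. The function name `agreement_summary` and the sample values are hypothetical. The metrics shown (mean bias, 95% Bland-Altman limits of agreement, mean absolute error, and mean absolute percentage error) are common choices, not a prescribed analysis plan.

```python
import statistics


def agreement_summary(device, reference):
    """Summarize agreement between paired device and reference values.

    Returns mean bias (device minus reference), 95% Bland-Altman limits of
    agreement, mean absolute error (MAE), and mean absolute percentage
    error (MAPE). Assumes every reference value is nonzero.
    """
    if len(device) != len(reference) or len(device) < 2:
        raise ValueError("need at least two paired observations")
    if any(r == 0 for r in reference):
        raise ValueError("MAPE is undefined when a reference value is 0")
    diffs = [d - r for d, r in zip(device, reference)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD of the paired differences
    return {
        "bias": bias,
        "loa_lower": bias - 1.96 * sd,
        "loa_upper": bias + 1.96 * sd,
        "mae": statistics.mean(abs(x) for x in diffs),
        "mape_pct": 100 * statistics.mean(
            abs(d - r) / r for d, r in zip(device, reference)
        ),
    }


# Hypothetical data: five walking bouts, manual count vs. device count.
reference = [100, 200, 150, 120, 180]
device = [98, 205, 147, 121, 176]
summary = agreement_summary(device, reference)
print(summary)
```

Reporting both bias and limits of agreement matters because a small average bias can hide large disagreement on individual observations.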

In practice

Methods & key considerations

Conducting a high-quality analytical validation requires careful experimental design and statistical rigor:

PRO TIP

Detecting and addressing algorithmic bias is a core component of analytical validation

Analytical validation must evaluate whether your algorithm performs appropriately in your intended population. This means you need to stratify your results by key demographic and clinical characteristics to detect potential algorithmic bias.

How to operationalize this:

- Report algorithm performance (accuracy, bias, limits of agreement) separately for relevant subgroups. For example, investigate performance stratified by race, ethnicity, sex, age, body composition, disease severity, and comorbidities.

- Compare subgroup performance against your pre-specified acceptance criteria. Does every group meet the threshold?

- If you detect differential performance: Document it transparently, investigate root causes (sensor limitations vs. algorithm design), and determine whether the algorithm remains fit-for-purpose for all intended users or requires modification.
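The stratification steps listed above can be sketched in Python as follows. This is a minimal example under stated assumptions: the record layout, the `stratified_check` helper, and both acceptance thresholds are hypothetical placeholders that a real study would pre-specify in its statistical analysis plan. The toy sample sizes are far too small for a real subgroup analysis.

```python
import statistics
from collections import defaultdict

# Hypothetical pre-specified acceptance criteria (units of the measure, e.g., steps).
MAX_ABS_BIAS = 5.0
MAX_LOA_HALF_WIDTH = 10.0  # half-width of the 95% limits of agreement


def stratified_check(records, key):
    """Compute Bland-Altman bias and limits of agreement per subgroup.

    Each record is a dict with 'device', 'reference', and a subgroup field
    named by `key` (e.g., 'sex', 'age_band', 'disease_severity').
    """
    diffs_by_group = defaultdict(list)
    for rec in records:
        diffs_by_group[rec[key]].append(rec["device"] - rec["reference"])

    report = {}
    for group, diffs in sorted(diffs_by_group.items()):
        if len(diffs) < 2:
            # Too few paired observations to estimate variability.
            report[group] = {"n": len(diffs), "meets_criteria": None}
            continue
        bias = statistics.mean(diffs)
        half_width = 1.96 * statistics.stdev(diffs)
        report[group] = {
            "n": len(diffs),
            "bias": bias,
            "loa": (bias - half_width, bias + half_width),
            "meets_criteria": abs(bias) <= MAX_ABS_BIAS
            and half_width <= MAX_LOA_HALF_WIDTH,
        }
    return report


# Hypothetical toy data in which the device over-counts in one subgroup.
records = [
    {"sex": "F", "device": 101, "reference": 100},
    {"sex": "F", "device": 99, "reference": 100},
    {"sex": "F", "device": 102, "reference": 100},
    {"sex": "M", "device": 110, "reference": 100},
    {"sex": "M", "device": 108, "reference": 100},
    {"sex": "M", "device": 112, "reference": 100},
]
report = stratified_check(records, "sex")
all_groups_pass = all(r["meets_criteria"] is True for r in report.values())
for group, result in report.items():
    print(group, result)
print("Every subgroup meets criteria:", all_groups_pass)
```

A subgroup that fails is a signal to investigate rather than an automatic verdict: the root cause may lie in the sensor, the algorithm design, or simply an underpowered subgroup.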

Teams should also examine whether race-based adjustments have been explicitly embedded in the algorithm itself. Please visit DiMe’s Removing harmful race-based clinical algorithms toolkit, which provides methods to identify and remove harmful race-based proxies.

You will also find this topic in The Playbook: Section 2.4(a) Industry Considerations—Ethics.

Strategic value

Analytical validation is a prerequisite for regulatory and clinical acceptance: it establishes the credibility of the digital measure by demonstrating that it is equivalent, or acceptably close to equivalent, to an accepted measure.
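One common way to make "equivalent" testable is an equivalence test on the mean bias: pre-specify a margin and check whether the 90% confidence interval for the mean device-minus-reference difference falls entirely inside it, which corresponds to the two one-sided tests (TOST) procedure at alpha = 0.05. The sketch below is an illustration under assumptions, not a regulatory method. The helper name and margins are hypothetical, and it uses a large-sample normal quantile from Python's standard library where small studies would normally use a t quantile.

```python
import math
import statistics
from statistics import NormalDist


def within_equivalence_margin(diffs, margin, alpha=0.05):
    """Return (passes, (lower, upper)) for a TOST-style check on mean bias.

    diffs: paired device-minus-reference differences.
    margin: hypothetical pre-specified equivalence margin (same units).
    Uses a normal quantile; substitute a t quantile for small samples.
    """
    if len(diffs) < 2:
        raise ValueError("need at least two paired differences")
    bias = statistics.mean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    z = NormalDist().inv_cdf(1 - alpha)  # about 1.645 for alpha = 0.05
    lower, upper = bias - z * se, bias + z * se  # (1 - 2*alpha) CI
    return (-margin < lower and upper < margin), (lower, upper)


diffs = [-2, 5, -3, 1, -4]  # hypothetical paired differences
print(within_equivalence_margin(diffs, margin=5.0))
print(within_equivalence_margin(diffs, margin=2.0))
```

The same data can pass or fail depending on the margin, which is why the margin has to be justified and fixed before the analysis.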

For developers, a well-executed analytical validation is a differentiator – it shows your algorithm has quantifiable performance against an accepted reference standard.

It also enables transparent communication of measurement accuracy, performance limits, and underlying assumptions, supporting reuse of evidence across studies and more efficient engagement with adopters and regulators.

For adopters, analytical validation informs how a digital measure can be used in practice within a study. Understanding algorithm performance relative to a reference helps adopters anticipate variability, set appropriate thresholds for change, and assess whether the algorithm can reliably derive a digital measure.

Stakeholder roles & collaboration

Typically, the technology developer or algorithm owner leads analytical validation efforts, since they have the data science expertise and access to the algorithm for testing. However, collaboration is highly beneficial: a multidisciplinary team of data scientists, engineers, clinicians, and statisticians should work together. For example, if an adopter wants to use an activity sensor as an endpoint, they might partner with the developer and perhaps an academic center to conduct a validation study. The developer provides technical know-how and sDHT access; the adopter (here, the study sponsor) provides understanding of the patient population and clinical endpoints; statisticians ensure rigorous analysis. This kind of co-investment can accelerate the process and share the resource burden.

Notably, analytical validation data can sometimes be reused or shared: if one company validates a sensor’s gait metric in multiple sclerosis patients, another company might leverage that evidence for a similar context (provided the use conditions match).

However, caution is warranted in generalizing validation data. Evidence may not fully transfer to a new population or slightly different use – scientific judgment is needed to decide if a new analytical validation study is required. Regulators will expect justification if you rely on prior validation data in a new context. Generally, if the hardware, algorithm, and population remain the same, you need not repeat analytical validation for every new trial. But any significant change (new sensor version, new patient population, etc.) means you may need to generate additional validation evidence.

PRO TIP FOR DEVELOPERS

Design analytical validation with future regulatory submissions in mind. Engage regulators early (e.g., via an FDA Q-Submission meeting) to get feedback on your validation plan and ensure it will meet their expectations (see Section 3: Engage regulators).

Aligning on the choice of reference standard, statistical methods, and success criteria with regulatory advisors can prevent costly do-overs. Additionally, consider publishing your analytical validation study in a peer-reviewed journal to build credibility and transparency.


Library resources to guide you

The sDHT roadmap library gathers 200+ external resources to support the adoption of sensor-based digital health technologies. To help you apply the concepts in this section, we’ve curated specific spotlights that provide direct access to critical guidance and real-world examples, helping you move from strategy to implementation.

Features essential guidance, publications, and communications from regulatory bodies relevant to this section. Use these resources to inform your regulatory strategy and ensure compliance.

Gathers real-world examples, case studies, best practices, and lessons learned from peers and leaders in the field relevant to this section. Use these insights to accelerate your work and avoid common pitfalls.