5.1 Data matters: Architecture, privacy, and oversight

The technical heart of sDHT adoption

It is vital to establish a secure, standards-compliant data infrastructure that ensures data integrity and preserves participant privacy throughout the trial lifecycle.

Moving continuous, remote data from the sensor to the analysis repository is complex. A trustworthy pipeline requires meticulous planning, adherence to the ALCOA+ data integrity principles (Attributable, Legible, Contemporaneous, Original, and Accurate, plus Complete, Consistent, Enduring, and Available), and rigorous security controls throughout the data lifecycle.

OVERVIEW

Architecture for sDHT data infrastructure

A successful data strategy starts from the recognition that sDHT architecture is organized around a series of core functions applied to data as it moves from capture at the edge to actionable insight at the application layer.

This end-to-end flow can be examined through a five-layer conceptual model, clarifying the shared responsibilities between developers and adopters at each step.

Layer 1 — sDHT capture (edge)

This layer includes all hardware responsible for collecting raw signals—such as wearables, ambient sensors, or implantables. It is the foundation of the data pipeline, and its reliability directly impacts all downstream layers.

- sDHT capture: Data should be generated under defined technical conditions that are appropriate for the intended measurement, ensuring signals are captured consistently and within specified operating parameters. At the point of capture, sufficient contextual information must be preserved to support traceability and downstream interpretation.

ALCOA+ principles:

Attributable: Assigns unique identifiers and metadata to trace data back to its source and participant.

Contemporaneous: Captures and timestamps data in real time.

Original: Preserves raw, unaltered data as the primary record at the point of capture.
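The three capture-layer principles above can be illustrated with a minimal Python sketch, assuming a hypothetical record schema (`CaptureRecord`, `make_capture_record`, and `verify_original` are illustrative names, not part of any sDHT vendor API). Each raw payload is paired with participant and device identifiers and the locked firmware version (Attributable), a UTC timestamp taken at capture (Contemporaneous), and a SHA-256 fingerprint of the unaltered bytes (Original).

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class CaptureRecord:
    """A raw sensor payload plus the metadata needed to trace it (hypothetical schema)."""
    participant_id: str     # pseudonymous study ID, not a direct identifier
    device_id: str          # unique identifier of the sDHT unit
    firmware_version: str   # locked firmware version in use at capture
    captured_at_utc: str    # ISO 8601 UTC timestamp taken at capture
    raw_sha256: str         # fingerprint of the unaltered raw bytes
    raw_bytes: bytes        # the original payload, kept as the primary record


def make_capture_record(participant_id: str, device_id: str, firmware_version: str,
                        raw_bytes: bytes, now: Optional[datetime] = None) -> CaptureRecord:
    # Timestamp at the moment of capture; `now` can be injected for testing.
    ts = now if now is not None else datetime.now(timezone.utc)
    return CaptureRecord(
        participant_id=participant_id,
        device_id=device_id,
        firmware_version=firmware_version,
        captured_at_utc=ts.isoformat(),
        raw_sha256=hashlib.sha256(raw_bytes).hexdigest(),
        raw_bytes=raw_bytes,
    )


def verify_original(record: CaptureRecord) -> bool:
    """True if the stored payload still matches the hash taken at capture."""
    return hashlib.sha256(record.raw_bytes).hexdigest() == record.raw_sha256
```

Any later change to `raw_bytes` makes `verify_original` return `False`, which gives downstream layers a cheap check that the primary record is still the original.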

Roles

Developer: Validate sensor performance and physical durability (verification). Lock the sDHT’s firmware and software versions and manage their provenance.

Adopter: Approve the missingness policy, accounting for sensor limitations as well as factors like device usability and connectivity variations.
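A missingness policy is easiest to approve and audit when it is written as explicit, testable rules. The sketch below assumes a wear-time rule: a day counts as valid if the device was worn for at least a minimum number of hours, and an assessment window is evaluable only with enough valid days. The 10-hour and 4-day defaults are hypothetical placeholders; real thresholds come from the protocol and the sDHT’s known limitations.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class MissingnessPolicy:
    min_wear_hours_per_day: float = 10.0  # hypothetical threshold, set by the adopter
    min_valid_days: int = 4               # hypothetical minimum per assessment window


def valid_days(wear_hours_by_day: Dict[str, float], policy: MissingnessPolicy) -> List[str]:
    """Return the dates (ISO strings, sorted) that meet the daily wear-time rule."""
    return [day for day, hours in sorted(wear_hours_by_day.items())
            if hours >= policy.min_wear_hours_per_day]


def window_is_evaluable(wear_hours_by_day: Dict[str, float], policy: MissingnessPolicy) -> bool:
    """An assessment window counts only if it has enough valid days."""
    return len(valid_days(wear_hours_by_day, policy)) >= policy.min_valid_days
```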

Layer 2 — Secure acquisition (ingest & landing)

This layer covers the secure transmission of data from the sDHT to the next processing node, such as a smartphone, hub, or server.

- Transmission security: Data should be encrypted during transfer and protected against data loss in areas with intermittent connectivity.

- Trustworthy records: Upon landing on the server, data must be encrypted, timestamped immediately, and stored in an immutable, time-ordered landing store. This supports trustworthy electronic records and demonstrates that the data are contemporaneous.
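One way to make a landing store time-ordered and tamper-evident is a hash chain: each entry records the server receipt time, the hash of its payload, and the hash of the previous entry, so any later edit breaks verification. This is a minimal sketch with a hypothetical `LandingStore` class. It only makes alteration detectable; genuine immutability would still rely on write-once storage and access controls, and encryption at rest is assumed to be handled by the storage layer.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import List, Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


class LandingStore:
    """Append-only, time-ordered store in which each entry hashes its predecessor."""

    def __init__(self) -> None:
        self._entries: List[dict] = []
        self._payloads: List[bytes] = []
        self._last_received: Optional[datetime] = None

    def append(self, payload: bytes, source_id: str,
               received_at: Optional[datetime] = None) -> dict:
        # Server receipt time is applied immediately on arrival.
        received = received_at if received_at is not None else datetime.now(timezone.utc)
        if self._last_received is not None and received < self._last_received:
            raise ValueError("receipt timestamps must be non-decreasing")
        entry = {
            "seq": len(self._entries),
            "source_id": source_id,
            "received_at": received.isoformat(),
            "payload_sha256": hashlib.sha256(payload).hexdigest(),
            "prev_hash": self._entries[-1]["entry_hash"] if self._entries else GENESIS_HASH,
        }
        entry["entry_hash"] = _digest(entry)
        self._entries.append(entry)
        self._payloads.append(payload)
        self._last_received = received
        return dict(entry)  # return a copy so callers cannot edit the stored entry

    def verify(self) -> bool:
        """Recompute every hash; any edited entry or payload breaks the chain."""
        prev = GENESIS_HASH
        for entry, payload in zip(self._entries, self._payloads):
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if (body["prev_hash"] != prev
                    or _digest(body) != entry["entry_hash"]
                    or hashlib.sha256(payload).hexdigest() != body["payload_sha256"]):
                return False
            prev = entry["entry_hash"]
        return True
```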

ALCOA+ principles:

Attributable: Logs user and system actions during ingestion for traceability.

Contemporaneous: Applies immediate timestamps upon data arrival to record timing accurately.

Original: Maintains certified copies of raw data, ensuring no alterations during transfer.

Roles

Developer: Implement secure, encrypted transmission and authenticated interfaces. Provide logging for audit trails and server-side receipt validation.

Adopter: Approve data loss mitigation strategies (e.g., buffering during offline periods). Ensure the repository meets record-keeping and retention regulations (e.g., 21 CFR Parts 11 and 312).
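Buffering during offline periods is typically a store-and-forward queue on the phone or hub. The hypothetical `StoreAndForwardBuffer` below keeps samples in capture order, retries delivery without reordering or dropping queued items, and counts (rather than silently discards) samples that arrive when the buffer is full, so any loss feeds into the missingness review. The capacity and the reject-when-full behavior are assumptions; an adopter might instead approve dropping the oldest samples.

```python
from collections import deque
from typing import Any, Callable, Deque


class StoreAndForwardBuffer:
    """Holds samples while offline and forwards them in capture order when a link is available."""

    def __init__(self, max_items: int = 10_000) -> None:
        self._queue: Deque[Any] = deque()
        self.max_items = max_items  # hypothetical capacity; real limits depend on device storage
        self.overflow_count = 0     # samples rejected because the buffer was full

    def add(self, sample: Any) -> bool:
        if len(self._queue) >= self.max_items:
            self.overflow_count += 1  # counted, not silently lost, for the missingness review
            return False
        self._queue.append(sample)
        return True

    def flush(self, send: Callable[[Any], bool]) -> int:
        """Send queued samples oldest-first; stop at the first failure so nothing is reordered or dropped."""
        sent = 0
        while self._queue:
            if not send(self._queue[0]):
                break  # keep the failed sample at the head for the next retry
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)
```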

Layer 3 — Processing & conditioning (transform & curate)

In this layer, raw or minimally processed signals are transformed to support downstream analysis (e.g., through cleaning, normalization, feature extraction, or artifact handling). Depending on the system architecture, some of these processing steps may occur earlier in the pipeline, but this layer is where transformation logic is defined, versioned, and governed. Processing may occur locally (on the sDHT or on another connected edge device) or in the cloud. Version control is critical, especially when processing pipelines are updated mid-study.

- Lineage and provenance: All transformations (e.g., cleaning, noise reduction, and initial feature extraction) should be performed using version-controlled processing logic (e.g., code, configuration files, or parameter sets) to ensure accuracy and reproducibility. Every output must have explicit lineage linking it back to the corresponding raw signal input.

- Validation focus: Analytical validation focuses heavily on the performance of the algorithms and pipelines in this layer.
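The lineage requirement can be made concrete by having every processing step emit a lineage record alongside its output. In this sketch, a moving-average filter stands in for any cleaning or feature-extraction step, and `PIPELINE_VERSION`, `LineageRecord`, and `run_step` are hypothetical names; in a real pipeline the version would come from the tagged release or commit of the processing code.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List, Tuple

PIPELINE_VERSION = "1.4.0"  # hypothetical; normally the release tag or commit of the processing code


@dataclass(frozen=True)
class LineageRecord:
    output_sha256: str
    input_sha256s: Tuple[str, ...]
    pipeline_version: str
    config_sha256: str


def sha256_json(obj) -> str:
    # Canonical JSON so identical inputs and configs always produce identical hashes.
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


def moving_average(signal: List[float], window: int) -> List[float]:
    """Simple noise-reduction step standing in for any versioned transformation."""
    return [sum(signal[i:i + window]) / window for i in range(len(signal) - window + 1)]


def run_step(raw_signal: List[float], config: dict) -> Tuple[List[float], LineageRecord]:
    output = moving_average(raw_signal, config["window"])
    lineage = LineageRecord(
        output_sha256=sha256_json(output),
        input_sha256s=(sha256_json(raw_signal),),
        pipeline_version=PIPELINE_VERSION,
        config_sha256=sha256_json(config),
    )
    return output, lineage
```

Because inputs and configuration are hashed deterministically, re-running the same version on the same input reproduces an identical lineage record, which is what a reproducibility check would compare.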

ALCOA+ principles:

Legible: Uses standardized formats to ensure data remains readable and understandable during processing.

Accurate: Applies error-checking and validation algorithms to correct inaccuracies and maintain integrity.
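Error checking at this layer is often implemented as plausibility checks that flag suspect values rather than overwriting them, so any correction becomes a separate, traceable step and the original value survives. The sketch below assumes a heart-rate stream and uses hypothetical bounds of 30 to 220 bpm; actual limits would be defined for the specific sDHT and study population.

```python
from typing import List, NamedTuple, Optional


class QCResult(NamedTuple):
    value: Optional[float]  # original value, carried through unchanged
    flag: str               # "ok", "out_of_range", or "missing"


def qc_heart_rate(samples: List[Optional[float]],
                  low: float = 30.0, high: float = 220.0) -> List[QCResult]:
    """Flag implausible or missing samples; bounds are hypothetical defaults."""
    results = []
    for v in samples:
        if v is None:
            flag = "missing"
        elif low <= v <= high:
            flag = "ok"
        else:
            flag = "out_of_range"
        results.append(QCResult(v, flag))
    return results
```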

Roles

Developer: Maintain version-controlled transformation code and configurations. Publish pipeline tests and execution logs for audit.

Adopter: Review and approve the definitions of extracted features to ensure suitability for the study’s analytical needs. Sign off on documentation demonstrating data lineage and pipeline reproducibility.

Layer 4 — Endpoint derivation & analysis readiness

This layer applies the core endpoint logic, computing the final digital measures that will be statistically analyzed. This is the bridge to the statistical analysis plan (SAP).

- End-to-end traceability: Each analysis variable must be clearly traceable back to the original input data and documented transformation steps, with transparent documentation of how the variable is calculated (e.g., formulas, aggregation rules, decision logic) to support reproducibility and auditability.
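To keep endpoint logic transparent, the calculation rules can live in a single specification object that the code reads, so the documented rule and the executed rule cannot drift apart. This sketch uses a hypothetical endpoint (mean daily step count over valid wear days) with illustrative thresholds, and returns the contributing days and their source hashes alongside the value.

```python
from statistics import mean
from typing import Any, Dict, List

# Hypothetical endpoint specification; the code reads its rules from here.
ENDPOINT_SPEC: Dict[str, Any] = {
    "name": "mean_daily_steps",
    "formula": "arithmetic mean of daily step totals over valid days",
    "min_wear_hours": 10.0,
    "min_valid_days": 4,
}


def derive_mean_daily_steps(days: List[Dict[str, Any]],
                            spec: Dict[str, Any] = ENDPOINT_SPEC) -> Dict[str, Any]:
    """Each day needs 'date', 'steps', 'wear_hours', and 'source_sha256' keys."""
    valid = [d for d in days if d["wear_hours"] >= spec["min_wear_hours"]]
    enough = len(valid) >= spec["min_valid_days"]
    return {
        "endpoint": spec["name"],
        "value": mean(d["steps"] for d in valid) if enough else None,  # None = not evaluable
        "n_valid_days": len(valid),
        "derived_from": [(d["date"], d["source_sha256"]) for d in valid],
        "spec": dict(spec),  # copy of the rules actually applied
    }
```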

ALCOA+ principle:

Accurate: Cross-references derived data against benchmarks and protocols to ensure clinical relevance and precision.

Roles

Developer: Implement the endpoint derivation algorithms. Maintain the traceability table (sample-level data → features → endpoint → SDTM/ADaM variables).

Adopter: Define and own clinical thresholds for endpoint derivation. Confirm mapping to CDISC SDTM/ADaM standards and verify export processes.
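The traceability table and the CDISC export can be sketched together. `TraceRow` links a raw data batch to a feature, an endpoint, and the ADaM parameter it is exported under; `to_adam_bds_row` builds a heavily simplified ADaM Basic Data Structure (BDS) record using standard variable names (STUDYID, USUBJID, PARAMCD, PARAM, AVAL, AVISIT). The parameter code `MNSTEPS` and the batch identifiers are hypothetical, and a submission-ready dataset needs many more variables plus define.xml metadata.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class TraceRow:
    raw_dataset: str   # landing-store batch or file identifier (hypothetical naming)
    feature: str       # intermediate feature derived from the raw data
    endpoint: str      # endpoint computed from the feature
    adam_paramcd: str  # ADaM parameter code the endpoint is exported under


def to_adam_bds_row(studyid: str, usubjid: str, avisit: str,
                    paramcd: str, param: str, aval: float) -> Dict[str, Any]:
    """Build one simplified ADaM BDS-style record (many required variables omitted)."""
    if len(paramcd) > 8:
        raise ValueError("PARAMCD must be 8 characters or fewer")
    return {"STUDYID": studyid, "USUBJID": usubjid, "PARAMCD": paramcd,
            "PARAM": param, "AVAL": aval, "AVISIT": avisit}


def sources_for(trace_table: List[TraceRow], paramcd: str) -> List[str]:
    """Walk the traceability table backwards from an exported parameter to its raw batches."""
    return sorted({row.raw_dataset for row in trace_table if row.adam_paramcd == paramcd})
```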

Layer 5 — Presentation & governed access

The final layer manages how the data is used and accessed by stakeholders—from internal dashboards to external regulatory archives.

- Role-based access: Role-based access controls must enforce least privilege, so users see only the data necessary for their specific role (e.g., a site coordinator sees only their site’s data). Access grants, changes, and use should be logged to support auditability and oversight.

- Archival and retention: All trial data must be archived in a durable electronic data repository that protects the data against unauthorized alteration or loss, meeting long-term record retention requirements.
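A least-privilege access check can be expressed as a deny-by-default function plus an audit entry for every access. The roles, the site-scoping rule, and the rule that statisticians see only derived data are hypothetical examples of an adopter-approved access matrix, not a recommended configuration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Dict, List


@dataclass(frozen=True)
class User:
    user_id: str
    role: str
    site: str = ""


def can_read(user: User, record: Dict[str, Any]) -> bool:
    # Hypothetical access matrix; anything not explicitly granted is denied.
    if user.role == "data_manager":
        return True
    if user.role == "site_coordinator":
        return record["site"] == user.site
    if user.role == "statistician":
        return record["level"] == "derived"
    return False


def read_records(user: User, records: List[Dict[str, Any]],
                 audit_log: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    visible = [r for r in records if can_read(user, r)]
    # Every access is logged, including ones that return nothing.
    audit_log.append({
        "user_id": user.user_id,
        "role": user.role,
        "action": "read",
        "n_records": len(visible),
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return visible
```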

ALCOA+ principle:

Legible: Employs user-friendly formats to keep data clear and accessible over time, including for archival purposes.

Roles

Developer: Deliver role-scoped monitoring dashboards, alerts, and error logs. Maintain a software bill of materials (SBOM) and vulnerability management processes where applicable. Define and own security and privacy protocols (HIPAA/GDPR).

Adopter: Verify participant-control and withdrawal workflows. Own release approvals to the analysis repository.

This model adapts recognized IoT architecture standards (ISO/IEC 30141, IEEE 2413-2019) for sDHT-enabled trials and aligns with key standards, including FDA 21 CFR Part 11, ALCOA+ data integrity principles, CDISC SDTM/ADaM, and DiMe’s Sensor Data Architecture Framework. It also incorporates recent (2023) FDA guidance on digital health technologies and AI-enabled software.

Special considerations for layers 3 & 4

AI/ML integrity

As artificial intelligence (AI) and machine learning (ML) increasingly power sDHTs, particularly for real-time signal interpretation and endpoint calculation, adopters and developers are responsible not only for validating these tools before trial launch but also for ensuring their safe and consistent performance throughout the study.

While AI/ML-powered sDHTs are subject to the same foundational evaluation principles (V3+) as traditional algorithms, they require specialized monitoring controls to manage the risk of performance degradation. Regulators have made clear that AI/ML-enabled systems must be monitored, version-controlled, and governed under a documented oversight framework, especially in decentralized or remote settings. In this context, monitoring focuses strictly on maintaining the integrity of the single, locked model used for the trial endpoint.
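Monitoring a locked model involves two separate questions: is the deployed model still exactly the one that was locked, and is its performance holding up? The sketch answers the first by comparing a SHA-256 hash of the model artifact with the locked value, and the second with a rolling agreement rate against reference labels (for example, from an adjudicated quality-control subsample, which is an assumption here). The window size and alert threshold are hypothetical; an alert would trigger investigation under the oversight plan, not retraining of the endpoint model.

```python
import hashlib
from collections import deque
from typing import Any, Deque


def model_is_locked(model_bytes: bytes, locked_sha256: str) -> bool:
    """True only if the deployed artifact is byte-identical to the locked model."""
    return hashlib.sha256(model_bytes).hexdigest() == locked_sha256


class AgreementMonitor:
    """Rolling agreement between model outputs and reference labels; detects, never retrains."""

    def __init__(self, window: int = 100, alert_below: float = 0.90) -> None:
        self._hits: Deque[bool] = deque(maxlen=window)  # hypothetical window size
        self.alert_below = alert_below                  # hypothetical alert threshold

    def update(self, predicted: Any, reference: Any) -> bool:
        """Record one comparison; return True when the full window falls below threshold."""
        self._hits.append(predicted == reference)
        if len(self._hits) < self._hits.maxlen:
            return False  # not enough data yet to judge
        return sum(self._hits) / len(self._hits) < self.alert_below
```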

IN PRACTICE

Key considerations

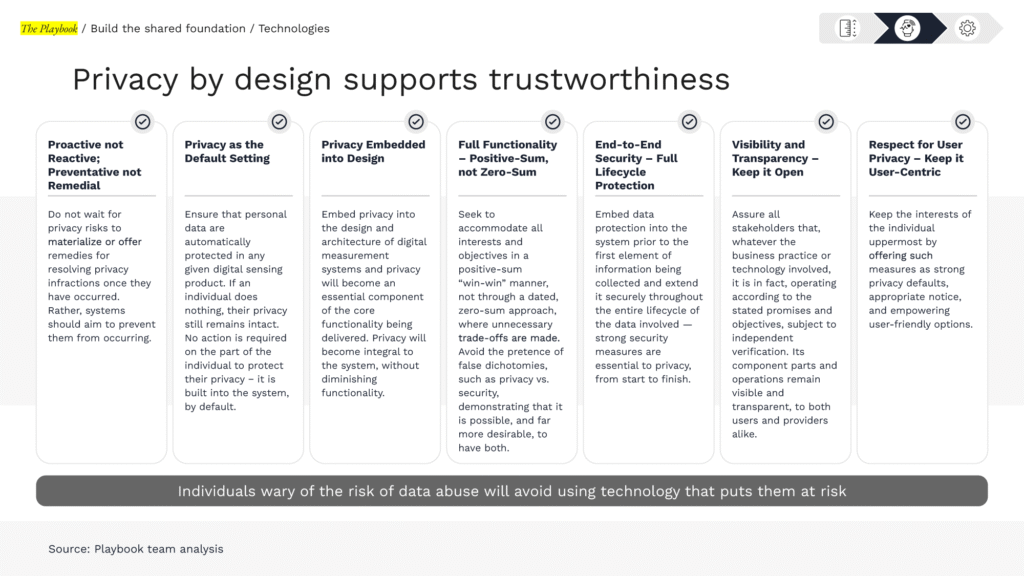

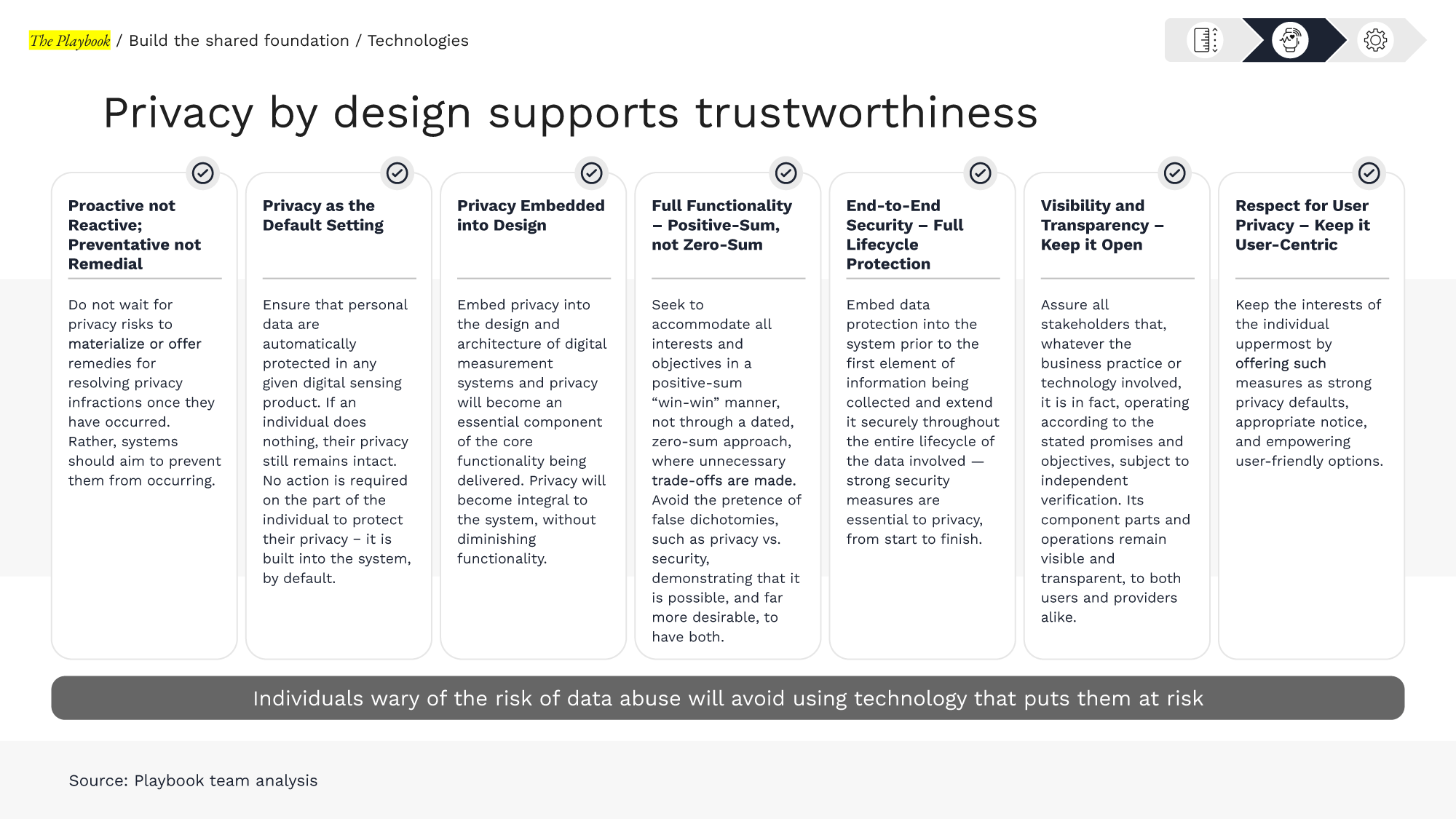

sDHTs can generate large volumes of continuous, high-resolution data, often captured passively and transmitted in the background. Even when data handling is technically compliant, unclear communication about data collection, use, and safeguards, particularly between study teams and participants, can erode trust.

Study teams should make privacy protections and participant control explicit, understandable, and operable across the full data lifecycle.

For additional context on sDHT privacy and data best practices, see The Playbook: Digital Clinical Measures.

Developers: Implement privacy-by-design defaults in applications and dashboards; label raw vs. derived data; instrument audit logs; expose configurable capture settings where protocol-appropriate.

Adopters: Ensure consent language matches actual data practices; verify participant-control workflows (pause, disable, withdraw) are testable; approve access matrices; track and close privacy-related incidents.
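Participant-control workflows are easier to test when the allowed states and transitions are explicit. The hypothetical `ParticipantControl` class below supports pausing, disabling individual data streams, and withdrawal, logs who made each change, and treats withdrawal as terminal for collection. Consistent with the sample consent language in this section, withdrawal here only stops capture; what happens to previously collected data is a separate, documented decision.

```python
from enum import Enum
from typing import List, Set, Tuple


class CaptureState(Enum):
    ACTIVE = "active"
    PAUSED = "paused"
    WITHDRAWN = "withdrawn"


# Allowed transitions; withdrawal is terminal for data collection.
ALLOWED = {
    CaptureState.ACTIVE: {CaptureState.PAUSED, CaptureState.WITHDRAWN},
    CaptureState.PAUSED: {CaptureState.ACTIVE, CaptureState.WITHDRAWN},
    CaptureState.WITHDRAWN: set(),
}


class ParticipantControl:
    def __init__(self) -> None:
        self.state = CaptureState.ACTIVE
        self.disabled_streams: Set[str] = set()
        self.history: List[Tuple[str, str, str]] = []  # (change, detail, actor)

    def transition(self, new_state: CaptureState, actor: str) -> None:
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.state.value} -> {new_state.value} is not allowed")
        self.history.append(("state", f"{self.state.value}->{new_state.value}", actor))
        self.state = new_state

    def disable_stream(self, stream: str, actor: str) -> None:
        self.disabled_streams.add(stream)
        self.history.append(("disable_stream", stream, actor))

    def should_capture(self, stream: str) -> bool:
        """Capture only when active and the specific stream has not been disabled."""
        return self.state is CaptureState.ACTIVE and stream not in self.disabled_streams
```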

PRO TIP

Resources from DiMe’s Sensor Data Integrations (SDI) project are highly relevant to this section, providing consensus best practices for establishing technical and governance controls in clinical trials.

- Data Architecture Toolkit: includes Logical Data Architecture and Data Flow Design Tool

- Sensor Data Standards Toolkit: includes Interactive Landscape of Standards and Library of Standards

- SDI Implementation Toolkit: includes guidance on the ART Criteria (Accessible, Relevant, Trustworthy)

- Organizational Readiness Toolkit: includes Capabilities Maturity Model

EXAMPLES

Sample consent language excerpt

“This study uses a digital device that collects data continuously, including while you sleep or go about your daily life. We will collect [describe types of data] through this device. The data will be encrypted and stored securely. You may withdraw from the study at any time, and you may also ask us to stop collecting data from your device, even if you choose to continue other parts of the study. Please note that data already collected may be retained as part of the study dataset unless you specifically request otherwise.”

Did you know?

The Study Data Tabulation Model (SDTM) is a clinical data standard developed by the Clinical Data Interchange Standards Consortium (CDISC) that defines a consistent structure for organizing and formatting data from human and nonclinical studies for regulatory submissions.

“SDTM provides a standard to streamline processes in collection, management, analysis and reporting. Implementing SDTM supports data aggregation and warehousing; fosters mining and reuse; facilitates sharing; helps perform due diligence and other important data review activities; and improves the regulatory review and approval process. SDTM is also used in non-clinical data (SEND), medical devices and pharmacogenomics/genetics studies.

SDTM is one of the required standards for data submission to FDA (U.S.) and Pharmaceuticals and Medical Devices Agency (PMDA) (Japan).”

– CDISC

KEY TAKEAWAYS

Building trustworthy data

Architecture, privacy, and oversight are key elements in good data management.

Build the production data pipeline, map controls to ALCOA+ principles, and specify the minimal artifacts (manifests, processes, logs, dashboards) that demonstrate control. Risk-based oversight and disciplined data handling are consistent with current FDA/ICH expectations.


Library resources to guide you

The sDHT roadmap library gathers 200+ external resources to support the adoption of sensor-based digital health technologies. To help you apply the concepts in this section, we’ve curated specific spotlights that provide direct access to critical guidance and real-world examples, helping you move from strategy to implementation.

Features essential guidance, publications, and communications from regulatory bodies relevant to this section. Use these resources to inform your regulatory strategy and ensure compliance.

Gathers real-world examples, case studies, best practices, and lessons learned from peers and leaders in the field relevant to this section. Use these insights to accelerate your work and avoid common pitfalls.