4.4 Clinical validation

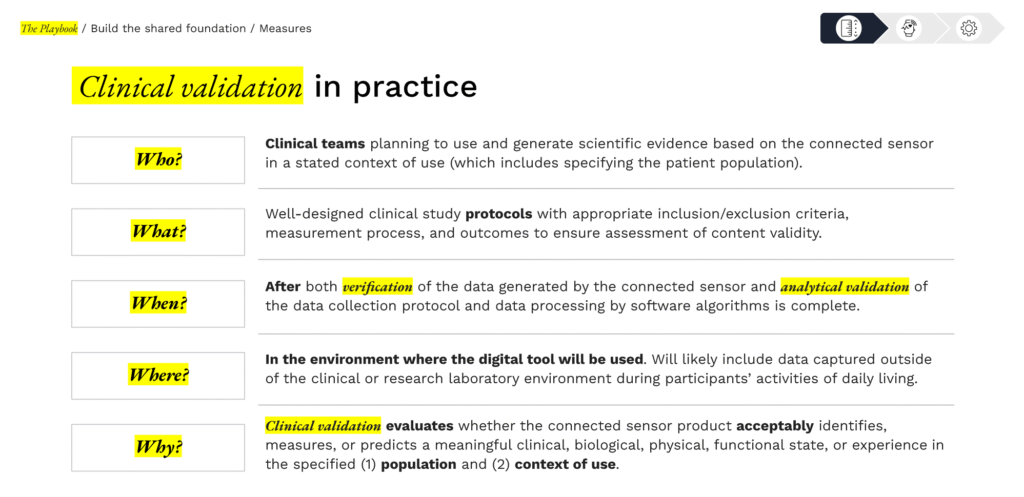

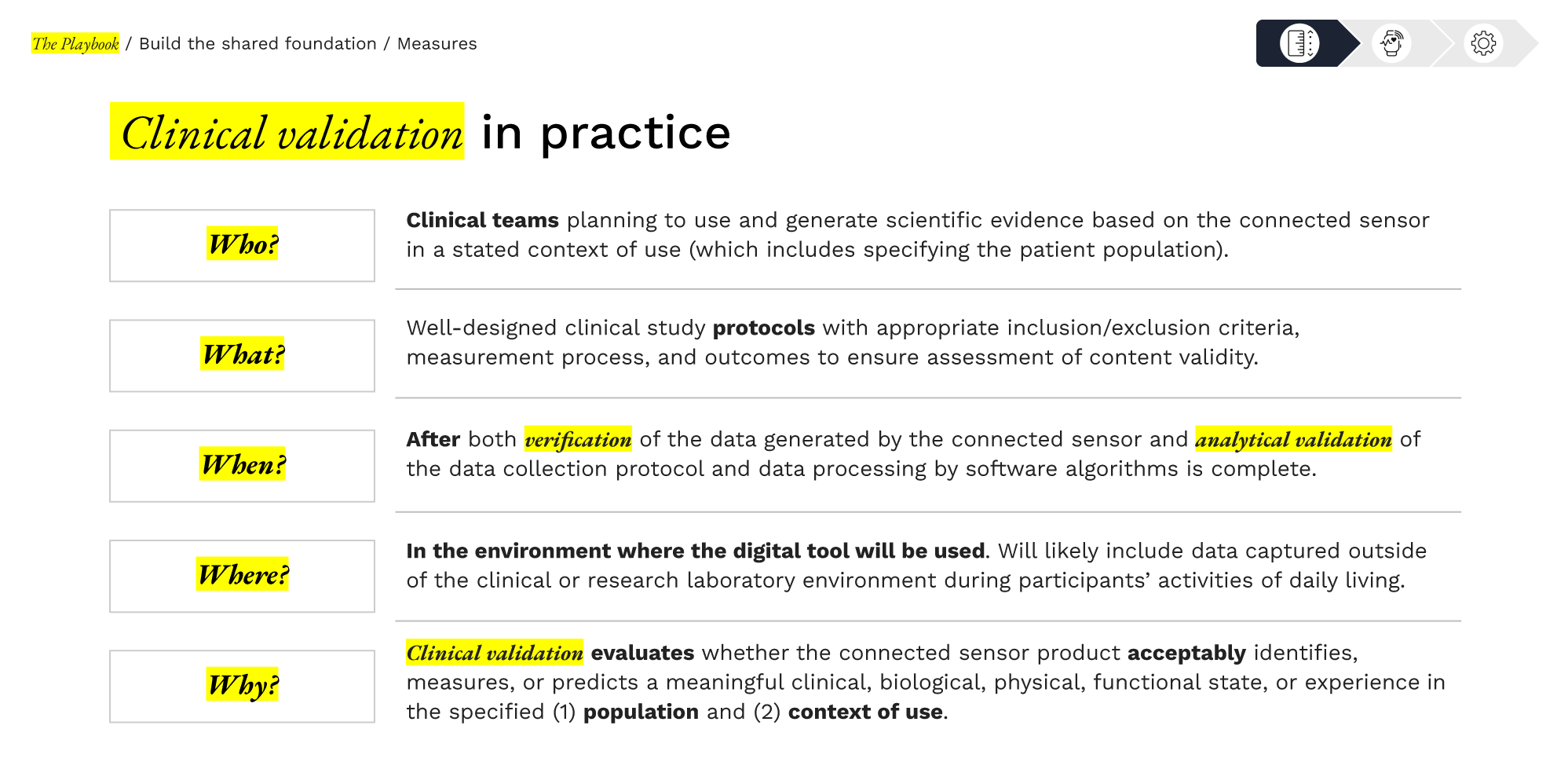

Clinical validation is the process of demonstrating that an sDHT-derived measure is meaningful in a clinical context.

Even if an sDHT’s sensor and algorithm work perfectly (that is, the technology has passed verification and analytical validation), we must still show that the resulting measure correlates with disease status, predicts outcomes, or otherwise has value in the intended context of use.

Clinical validation answers the pivotal question:

“Does this digital measure reflect something clinically important, and can it inform decisions about patient health?”

Overview

Demonstrating clinical relevance

Clinical validation evaluates whether the digital measure acceptably identifies, measures, or predicts a meaningful clinical, biological, physical, or functional state in the target patient population and context of use.

This often means correlating the sDHT measure with clinical endpoints (e.g., does a wearable activity measure distinguish between patients with varying disease severity?) or demonstrating it can track clinically meaningful changes (e.g., improvement in a digital symptom score corresponds to improvement in a traditional clinical scale).
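The two kinds of evidence described above, separating severity groups and tracking change against a traditional scale, can be illustrated with a small analysis sketch. This is a minimal example under stated assumptions: it assumes paired per-participant values, uses Spearman's rank correlation as one common choice for ordinal clinical scales (a swapped-in illustration, not a method this section prescribes), and all function names are hypothetical. It uses only the Python standard library.

```python
from statistics import mean


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def change_scores(baseline, follow_up):
    """Per-participant change from baseline (follow-up minus baseline)."""
    return [f - b for b, f in zip(baseline, follow_up)]


def group_means(measure, groups):
    """Mean digital measure per clinician-rated severity group (known-groups check)."""
    by_group = {}
    for value, g in zip(measure, groups):
        by_group.setdefault(g, []).append(value)
    return {g: mean(v) for g, v in sorted(by_group.items())}
```

In use, `spearman(daily_steps, severity_score)` addresses the cross-sectional question (does the measure track disease severity?), `group_means` gives a quick known-groups comparison across severity categories, and `spearman(change_scores(...), change_scores(...))` asks whether change in the digital score moves with change on the traditional clinical scale. A real analysis would also report confidence intervals and account for repeated measures as set out in the prespecified analysis plan.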

Essentially, clinical validation provides the evidence that using the digital measure will yield medically interpretable and actionable insights.

Methods & key considerations:

Clinical validation is highly context-specific. Key elements include:

Strategic value

Clinical validation demonstrates clinical meaningfulness. For regulatory acceptance, clinical validation is critical if the digital measure will support labeling claims (e.g., when it is used as a primary or secondary endpoint in a pivotal trial). Regulators and adopters need to see that the digital endpoint has a demonstrated relationship to clinical benefit or outcome. One pathway to formalize this is through FDA’s qualification programs. For instance, FDA can “qualify” a digital measure as a Drug Development Tool, meaning it is accepted for a specific context of use across multiple drug development programs. A parallel program exists for devices: in 2024, FDA qualified a smartwatch-derived estimate of atrial fibrillation burden (the Apple Watch AFib History Feature) as a Medical Device Development Tool for use in clinical studies. Such achievements often require robust clinical validation data pooled across studies. Once qualified, such measures can be used by any sponsor within the qualified context of use, illustrating how early investment in validation can pay off broadly.

From a clinical benefit perspective, a validated digital measure can improve trial design and patient care. It might enable remote or continuous monitoring of outcomes that were previously hard to capture. For example, if you validate that a digital sleep measure correlates with depression severity, you could use it to evaluate treatment response more objectively or catch early signs of relapse. The strategic value also extends to payers and healthcare systems: a clinically validated digital endpoint can support reimbursement arguments by showing real-world impact. (This section focuses on regulatory and scientific validation, but evidence of clinical relevance is also exactly what payers look for when assessing a new digital technology’s value.)

Collaboration & burden sharing

Clinical validation sometimes falls to the adopter or end user of the sDHT (most commonly a pharmaceutical sponsor incorporating the technology into a trial), because sponsors control study conduct and have access to participants and outcome data. However, developers are essential partners: they help ensure the technology is deployed correctly, advise on protocol guardrails, and support analysis and troubleshooting. A recurring challenge is “developer–adopter tension”: because generating new clinical validation evidence is resource-intensive, developers may hope adopters will run clinical validation inside their programs, while adopters may prefer technologies that arrive with convincing validation to reduce time and cost.

Durable solutions use shared-risk approaches that align incentives, distribute workload, and build evidence multiple parties can rely on. Three approaches can help teams share the validation burden and accelerate progress.

Option 1: Precompetitive consortia

Concrete examples show how this model works in practice.

The multicenter WATCH-PD program evaluated smartphone- and smartwatch-based measures of Parkinson’s disease across 17 U.S. sites, engaging multiple stakeholders (industry, regulators, investigators, and people living with PD) and publishing open-access longitudinal results that others can build on. This collaborative design produced generalizable evidence on gait, tremor, and other digital measures over 12 months in early, untreated PD—evidence intended to inform future trials beyond any single sponsor.

The European RADAR-CNS consortium (an Innovative Medicines Initiative/IHI public–private partnership) brought together pharmaceutical companies, academic centers, and technology partners to develop and evaluate remote assessment approaches for neurological and psychiatric conditions using wearables and smartphones, releasing platforms and peer-reviewed outputs for community reuse. By distributing costs and consolidating data across sites and sponsors, RADAR-CNS generated a body of digital biomarker evidence that would have been difficult for any single party to produce alone.

PRO TIP

DATAcc by the Digital Medicine Society (DiMe), in collaboration with FDA’s Center for Devices and Radiological Health, convenes industry, academia, patient groups, and regulators to co-develop open resources and shared evidence for digital health measurement. Organizations can join active, time-boxed projects or propose new ones, contribute use cases to open libraries, and participate in a standing, multi-stakeholder steering community. This is a practical on-ramp for adopters and developers who want to share cost and accelerate validation in a precompetitive setting.

Why participate in a collaborative community?

✓ Visibility and reuse: Spotlight your implementations, publish case studies, and help shape field standards that lower downstream integration and submission burden.

✓ Shared work, shared wins: Pool costs and data to create generalizable evidence and reusable tools (e.g., core measures, calculators, implementation guides).

✓ Regulatory adjacency: Engage alongside FDA’s Digital Health Center of Excellence within a recognized “collaborative community” model that advances regulatory science.

Option 2: Milestone-tied pilots with shared data rights

Shared validation studies can be structured so financial commitments scale with predefined validation milestones—such as enrollment thresholds, reliability/validity criteria, prespecified missingness limits, and sufficient subgroup representation—with pooled analyses and joint publications agreed in advance.
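To make milestone gating concrete, the sketch below encodes a set of prespecified milestones and checks interim data against them before the next funding commitment is released. It is a minimal illustration only: the class and function names, the use of a test-retest intraclass correlation (ICC) as the reliability criterion, and the wear-day definition of missingness are all hypothetical choices; actual criteria and thresholds would be agreed in the study agreement and analysis plan.

```python
from dataclasses import dataclass


@dataclass
class Milestones:
    """Hypothetical prespecified gates agreed before the pilot starts."""
    min_enrolled: int
    min_reliability_icc: float   # assumed reliability criterion (test-retest ICC)
    max_missing_fraction: float  # prespecified missingness limit
    min_per_subgroup: int        # minimum participants in each prespecified subgroup


@dataclass
class InterimData:
    """Hypothetical interim summary shared by the study team."""
    enrolled: int
    reliability_icc: float
    expected_wear_days: int
    observed_wear_days: int
    subgroup_counts: dict


def evaluate_gates(m: Milestones, d: InterimData) -> dict:
    """Return a pass/fail result for each prespecified milestone."""
    missing = 1 - d.observed_wear_days / d.expected_wear_days
    return {
        "enrollment": d.enrolled >= m.min_enrolled,
        "reliability": d.reliability_icc >= m.min_reliability_icc,
        "missingness": missing <= m.max_missing_fraction,
        # An empty subgroup table fails rather than passing vacuously.
        "subgroups": bool(d.subgroup_counts)
        and all(n >= m.min_per_subgroup for n in d.subgroup_counts.values()),
    }


def next_tranche_released(gates: dict) -> bool:
    """Release the next funding tranche only if every gate is met."""
    return all(gates.values())
```

Returning a per-gate result rather than a single pass/fail keeps the shared record transparent: both parties can see which milestone was missed and decide together whether to adjust the protocol or pause funding.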

Adopters, this approach lowers up-front spend and surfaces feasibility and performance signals earlier; it also yields documentation that maps cleanly to FDA’s recommendations for using sDHTs in clinical investigations (clear context of use, appropriate participant characteristics, and prospective data-quality methods).

Developers, this approach can give you shared rights to pooled datasets and publications, and earlier, real-world feedback to guide product refinements and build market visibility without carrying the entire study cost alone.

Option 3: Joint adopter–developer working groups

As outlined in Section 2.3: Stakeholder alignment, teams are most effective when the right people work together from the start: clinical leads, statisticians, data managers, quality/regulatory partners, and product or engineering leads. This group can shape the validation plan together, agree on timelines, and make roles and decision rights explicit.

Adopters, this approach helps ensure the study design reflects how the sDHT actually performs in practice, builds clear plans for verification and day-to-day monitoring, and keeps decisions and rationales documented in one place. That shared record makes subsequent conversations with regulators more straightforward, whether the digital endpoint will be used routinely in trials or is being prepared for a future qualification pathway. Integrating developer expertise appropriately can mean fewer redesigns and delays, coordinated decisions across clinical and technical teams, and a single, traceable narrative that supports regulatory interactions.

Developers, partnering closely with adopters can give you early visibility into protocol assumptions and data standards, fewer last-minute change requests, and clearer documentation tying technical choices to clinical goals.

Regulatory engagement

Library resources to guide you

The sDHT roadmap library gathers 200+ external resources to support the adoption of sensor-based digital health technologies. To help you apply the concepts in this section, we’ve curated specific spotlights that provide direct access to critical guidance and real-world examples, helping you move from strategy to implementation.

Features essential guidance, publications, and communications from regulatory bodies relevant to this section. Use these resources to inform your regulatory strategy and ensure compliance.

Gathers real-world examples, case studies, best practices, and lessons learned from peers and leaders in the field relevant to this section. Use these insights to accelerate your work and avoid common pitfalls.